Before going further, it helps to clarify what “AI crawlers” (also called LLM crawlers) means. They fall roughly into two categories:

- Conventional crawler tools: Their results are directly used in LLM contexts. Strictly speaking, these have little to do with AI.

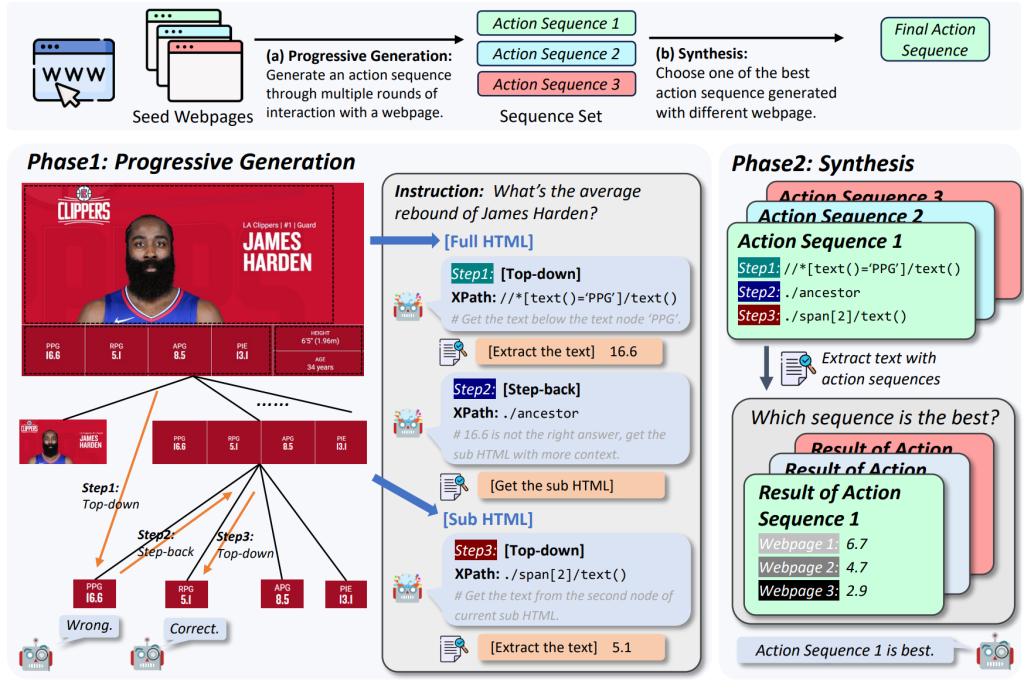

- LLM-driven new crawler solutions: Users specify data collection targets through natural language. The LLM then autonomously analyzes web structures, formulates crawling strategies, executes interactive operations to obtain dynamic data, and finally returns structured target content.

LLM-Driven Crawler Solutions

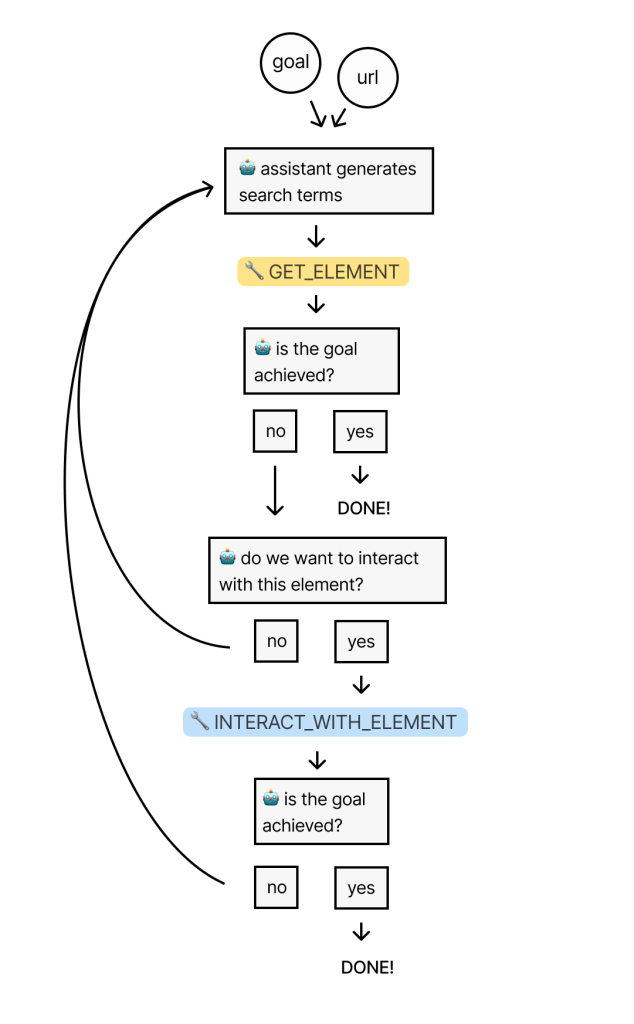

For a general treatment of AI-driven web crawlers, see this article, in which the author walks through the design from initial concept to working solution, including optimization and analysis of the results. Here we briefly outline the process, which closely mimics the steps a human would take:

- Fetch the entire webpage’s HTML code.

- Use AI to generate a set of related terms (e.g., when looking for prices, the AI suggests keywords such as “prices,” “fee,” and “cost”).

- Search the HTML for these keywords to build a list of candidate nodes.

- Have the AI analyze the candidate list and identify the most relevant nodes.

- Use AI to decide whether the node needs to be interacted with (typically clicked).

- Repeat the above steps until the final result is obtained.
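The loop above can be sketched in a few dozen lines of Python. This is a minimal, offline illustration under stated assumptions, not the referenced author's implementation: both AI calls (keyword expansion in step 2 and node ranking in step 4) are replaced by hypothetical stand-ins (a fixed synonym table and a "contains a digit" heuristic), "clicking" simply means following a link's `href`, and `PAGES` is an in-memory dictionary standing in for real page fetches. All function names are illustrative.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional, Tuple

VOID_TAGS = {"br", "hr", "img", "input", "link", "meta"}  # tags with no closing tag
Node = Tuple[str, str, Optional[str]]  # (tag path, text, enclosing link href)


class TextNodeCollector(HTMLParser):
    """Records every non-empty text node with its tag path and link target."""

    def __init__(self) -> None:
        super().__init__()
        self.stack: List[str] = []
        self.href: Optional[str] = None
        self.nodes: List[Node] = []

    def handle_starttag(self, tag, attrs):
        if tag in VOID_TAGS:
            return
        self.stack.append(tag)
        if tag == "a":
            self.href = dict(attrs).get("href")

    def handle_endtag(self, tag):
        if tag == "a":
            self.href = None
        if tag in self.stack:  # pop back to the matching open tag
            while self.stack.pop() != tag:
                pass

    def handle_data(self, data):
        text = data.strip()
        if text:
            self.nodes.append(("/".join(self.stack), text, self.href))


def expand_keywords(target: str) -> List[str]:
    # Hypothetical stand-in for step 2: a real system would ask an LLM.
    related = {"price": ["price", "pricing", "fee", "cost"]}
    return related.get(target, [target])


def find_candidates(html: str, keywords: List[str]) -> List[Node]:
    # Step 3: keep text nodes that mention any keyword (case-insensitive).
    collector = TextNodeCollector()
    collector.feed(html)
    collector.close()
    return [n for n in collector.nodes if any(k in n[1].lower() for k in keywords)]


def crawl_for(target: str, pages: Dict[str, str], start: str = "/",
              max_steps: int = 5) -> Optional[str]:
    url, keywords = start, expand_keywords(target)
    for _ in range(max_steps):  # step 6: repeat until an answer is found
        candidates = find_candidates(pages[url], keywords)
        # Hypothetical stand-in for step 4: treat nodes containing a digit as answers.
        answers = [n for n in candidates if any(ch.isdigit() for ch in n[1])]
        if answers:
            return answers[0][1]
        # Step 5: "click" the first matching link we can fetch by following its href.
        links = [n[2] for n in candidates if n[2] in pages]
        if not links:
            return None
        url = links[0]
    return None


PAGES = {  # in-memory pages instead of real HTTP fetches (step 1)
    "/": '<nav><a href="/pricing">See pricing</a></nav><p>Welcome!</p>',
    "/pricing": "<div><h2>Plans</h2><p>Pro plan cost: $20/month</p></div>",
}
print(crawl_for("price", PAGES))  # Pro plan cost: $20/month
```

Replacing the two stand-ins with real LLM calls, and `PAGES` with a real fetcher, gives the overall shape of the process described above; the `max_steps` cap keeps the loop from clicking indefinitely when no answer turns up.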

Skyvern

Skyvern is a browser automation tool built on multimodal models, designed to make workflow automation more efficient and adaptable. Traditional automation relies on site-specific scripts, DOM parsing, and XPath selectors, which tend to break when a site’s layout changes. Skyvern instead analyzes the visual elements in the browser window in real time and combines them with LLM-generated interaction plans, so it can operate on websites it has never seen without custom code and is more resilient to layout changes. Built on browser automation libraries such as Playwright, it automates browser-based workflows through several key agents:

- Interactable Element Agent: Parses the webpage’s HTML structure and extracts interactive elements.

- Navigation Agent: Plans the navigation steps needed to complete a task, such as clicking buttons or entering text.

- Data Extraction Agent: Extracts data from webpages, capable of reading tables and text, and outputs data in user-defined structured formats.

- Password Agent: Fills in website password forms, reading usernames and passwords from password managers while protecting user privacy.

- 2FA Agent: Handles two-factor authentication (2FA) forms, intercepts 2FA requests, and obtains codes via user-defined APIs or waits for manual input.

- Dynamic Auto-complete Agent: Completes dynamic auto-complete forms, selecting appropriate options based on user input and form feedback, adjusting input as needed.
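To make the Interactable Element Agent more concrete, here is a rough sketch of the general idea: walk the HTML, keep the elements a user could click or type into, and give each a numeric ID that an LLM planner could refer to. This is not Skyvern's code (Skyvern also works from screenshots and a live browser); the set of interactable tags, the `onclick`/`role="button"` rule, and the output format are all assumptions made for illustration.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional

# Assumption for this sketch: what counts as "interactable".
INTERACTABLE_TAGS = {"a", "button", "input", "select", "textarea"}
VOID_TAGS = {"input"}  # no closing tag, so no inner text to collect


class InteractableExtractor(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.elements: List[Dict] = []
        self._open: Optional[Dict] = None  # element whose text is being collected

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        clickable = (
            tag in INTERACTABLE_TAGS
            or "onclick" in attr_map
            or attr_map.get("role") == "button"
        )
        if not clickable:
            return
        element = {"id": len(self.elements), "tag": tag, "attrs": attr_map, "text": ""}
        self.elements.append(element)
        if tag not in VOID_TAGS:
            self._open = element

    def handle_endtag(self, tag):
        if self._open is not None and self._open["tag"] == tag:
            self._open = None

    def handle_data(self, data):
        if self._open is not None and data.strip():
            self._open["text"] = (self._open["text"] + " " + data.strip()).strip()


def extract_interactables(html: str) -> List[Dict]:
    parser = InteractableExtractor()
    parser.feed(html)
    parser.close()
    return parser.elements


def describe(elements: List[Dict]) -> str:
    # Hypothetical compact listing that an LLM planner could be prompted with.
    lines = []
    for el in elements:
        attrs = el["attrs"]
        label = el["text"] or attrs.get("placeholder") or attrs.get("name") or ""
        lines.append(f'[{el["id"]}] <{el["tag"]}> {label}'.rstrip())
    return "\n".join(lines)


page = ('<form><input name="q" placeholder="Search"><button type="submit">Go</button></form>'
        '<a href="/login">Sign in</a><div onclick="openMenu()">Menu</div><p>Plain text</p>')
print(describe(extract_interactables(page)))
# [0] <input> Search
# [1] <button> Go
# [2] <a> Sign in
# [3] <div> Menu
```

In a design like this, a navigation step could ask the model to reply with an ID such as `[1]`, and the automation layer would map that ID back to the element to click or fill in.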

ScrapeGraphAI

ScrapeGraphAI uses large language models and graph logic to build scraping pipelines automatically, reducing the need for manual coding. Users describe the information they want, and ScrapeGraphAI handles single-page or multi-page scraping. It works with documents in formats such as XML, HTML, JSON, and Markdown, and offers several ready-made pipelines, including:

- SmartScraperGraph: Single-page scraper that needs only a user prompt and an input source.

- SearchGraph: Multi-page scraper that extracts information from the top results of a search engine.

- SpeechGraph: Single-page scraper that extracts information from a website and generates an audio file.

- ScriptCreatorGraph: Single-page scraper that generates a Python script for extracting the requested information.

- SmartScraperMultiGraph: Multi-page scraper that extracts information from a list of sources using a single prompt.

- ScriptCreatorMultiGraph: Multi-page scraper that generates a Python script for extracting information from multiple pages and sources.

ScrapeGraphAI thus lets people without deep programming knowledge automate scraping simply by describing the information they need, with pipelines covering single-page and multi-page extraction, audio generation, and script creation.
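The "graph logic" idea can be illustrated with a toy pipeline in which each node is a function that reads and updates a shared state dictionary, and edges decide which node runs next. This is a hypothetical sketch, not ScrapeGraphAI's API: the `PipelineGraph` class and the `fetch`/`parse`/`generate_answer` node functions are invented here, the source is inline HTML rather than a URL, and the LLM call is replaced by a naive word-overlap heuristic.

```python
import re
from typing import Callable, Dict, Optional

State = Dict[str, str]
Node = Callable[[State], State]


class PipelineGraph:
    """Hypothetical minimal graph: each node has at most one successor."""

    def __init__(self) -> None:
        self.nodes: Dict[str, Node] = {}
        self.edges: Dict[str, str] = {}
        self.entry: Optional[str] = None

    def add_node(self, name: str, fn: Node, entry: bool = False) -> None:
        self.nodes[name] = fn
        if entry:
            self.entry = name

    def add_edge(self, src: str, dst: str) -> None:
        self.edges[src] = dst

    def run(self, state: State) -> State:
        current = self.entry
        while current is not None:
            state = self.nodes[current](state)  # node returns the updated state
            current = self.edges.get(current)   # follow the outgoing edge, if any
        return state


def fetch(state: State) -> State:
    # Hypothetical: a real node would download state["source"]; here it is already HTML.
    return {**state, "html": state["source"]}


def parse(state: State) -> State:
    # Crude tag stripping with a regex; fine for a sketch, not for real-world HTML.
    text = re.sub(r"<[^>]+>", " ", state["html"])
    return {**state, "text": " ".join(text.split())}


def words(s: str) -> set:
    return set(re.findall(r"\w+", s.lower()))


def generate_answer(state: State) -> State:
    # Stand-in for the LLM: pick the sentence sharing the most words with the prompt.
    sentences = re.split(r"(?<=[.!?])\s+", state["text"])
    best = max(sentences, key=lambda s: len(words(s) & words(state["prompt"])))
    return {**state, "answer": best}


graph = PipelineGraph()
graph.add_node("fetch", fetch, entry=True)
graph.add_node("parse", parse)
graph.add_node("generate_answer", generate_answer)
graph.add_edge("fetch", "parse")
graph.add_edge("parse", "generate_answer")

result = graph.run({
    "prompt": "When was Acme founded?",
    "source": "<html><body><p>Acme was founded in 1999. It sells rockets.</p></body></html>",
})
print(result["answer"])  # Acme was founded in 1999.
```

Because nodes communicate only through the state dictionary, a new step (for example, chunking long pages before the answer node) can be added by registering one node and rewiring one edge, which is the kind of flexibility a graph-based design aims for.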

Conventional Crawler Tools

These tools clean ordinary web content and convert it to Markdown, which large models can understand and process more easily. Markdown keeps the document’s structure (headings, lists, tables, links) while discarding most markup noise, and LLMs generally respond better to well-structured input. The converted content is then supplied to the LLM as context, so the model can draw on online resources when answering questions.
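As a rough illustration of the cleaning and conversion step, the sketch below uses only Python's standard-library `html.parser` to turn a small subset of HTML (headings, paragraphs, list items, and links) into Markdown, dropping `script`, `style`, `nav`, and `footer` elements as noise. It is not how Crawl4AI or any other specific tool works internally; real converters handle tables, nesting, code blocks, and many edge cases that this hypothetical `html_to_markdown` ignores.

```python
from html.parser import HTMLParser
from typing import List, Optional

NOISE_TAGS = {"script", "style", "nav", "footer"}  # dropped entirely (an assumption)
BLOCK_PREFIX = {"h1": "# ", "h2": "## ", "h3": "### ", "p": "", "li": "- "}


class MarkdownConverter(HTMLParser):
    def __init__(self) -> None:
        super().__init__()
        self.blocks: List[str] = []  # finished Markdown blocks
        self.text = ""               # text of the block being built
        self.prefix = ""             # Markdown prefix for that block
        self.noise_depth = 0         # > 0 while inside a NOISE_TAGS element
        self.href: Optional[str] = None

    def flush(self) -> None:
        text = " ".join(self.text.split())  # collapse whitespace
        if text:
            self.blocks.append(self.prefix + text)
        self.text, self.prefix = "", ""

    def handle_starttag(self, tag, attrs):
        if tag in NOISE_TAGS:
            self.noise_depth += 1
        elif self.noise_depth:
            return
        elif tag in BLOCK_PREFIX:
            self.flush()
            self.prefix = BLOCK_PREFIX[tag]
        elif tag == "a":
            self.href = dict(attrs).get("href")
            if self.href:
                self.text += " ["

    def handle_endtag(self, tag):
        if tag in NOISE_TAGS:
            self.noise_depth = max(0, self.noise_depth - 1)
        elif self.noise_depth:
            return
        elif tag in BLOCK_PREFIX:
            self.flush()
        elif tag == "a" and self.href:
            self.text += f"]({self.href})"
            self.href = None

    def handle_data(self, data):
        if not self.noise_depth:
            self.text += data


def html_to_markdown(html: str) -> str:
    conv = MarkdownConverter()
    conv.feed(html)
    conv.close()
    conv.flush()
    merged: List[str] = []
    for block in conv.blocks:
        # Keep consecutive list items together as one tight list.
        if merged and block.startswith("- ") and merged[-1].startswith("- "):
            merged[-1] += "\n" + block
        else:
            merged.append(block)
    return "\n\n".join(merged)


page = ('<html><head><style>p{color:red}</style></head><body>'
        '<nav><a href="/">Home</a></nav><h1>Pricing</h1>'
        '<p>Read the <a href="/docs">docs</a> first.</p>'
        '<ul><li>Free: $0</li><li>Pro: $20</li></ul>'
        '<script>track()</script></body></html>')
print(html_to_markdown(page))
# Prints:
# # Pricing
#
# Read the [docs](/docs) first.
#
# - Free: $0
# - Pro: $20
```

The resulting string is the kind of text that would then be placed in the LLM prompt as context.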

Crawl4AI

Crawl4AI is an open-source web crawling and data extraction framework designed for AI applications. It can crawl multiple URLs concurrently, which greatly reduces the time needed for large-scale data collection. Its key crawling features include:

- Multiple Output Formats: Supports JSON, cleaned HTML, and Markdown.

- Dynamic Content Support: By injecting custom JavaScript, Crawl4AI can simulate user actions such as clicking a “next page” button to load more content, which covers common dynamic-loading patterns like pagination and infinite scroll.

- Multiple Chunking Strategies: Supports topic-based, regex-based, and sentence-based chunking, so users can split content to suit their needs.

- Media Extraction: Uses techniques such as XPath and regular expressions to precisely target and extract the desired data, including images, audio, and video, which is particularly useful for applications that rely on multimedia content.
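Two of the ideas above, crawling several URLs at once and chunking the result, can be sketched with the standard library alone. This is a hypothetical illustration, not Crawl4AI's API: `fetch` reads from an in-memory `PAGES` dictionary (with a short sleep standing in for network latency) instead of loading real pages, `asyncio.gather` provides the concurrency, and the regex-based and sentence-based chunkers are deliberately naive (the sentence splitter simply breaks after `.`, `!`, or `?`). Topic-based chunking needs a topic model and is omitted.

```python
import asyncio
import re
from typing import Dict, List

# Hypothetical in-memory "web" so the sketch runs offline.
PAGES = {
    "https://example.com/a": "Crawlers fetch pages. Pages become Markdown!",
    "https://example.com/b": "Chunking splits long text. Why chunk at all?",
}


async def fetch(url: str) -> str:
    # Stand-in for a real page load; the sleep mimics network latency.
    await asyncio.sleep(0.1)
    return PAGES[url]


async def crawl_many(urls: List[str]) -> Dict[str, str]:
    # gather() runs all fetches concurrently, so the total wait is roughly
    # the slowest single fetch rather than the sum of all of them.
    texts = await asyncio.gather(*(fetch(u) for u in urls))
    return dict(zip(urls, texts))


def regex_chunks(text: str, pattern: str = r"\n\s*\n") -> List[str]:
    # Split wherever the pattern matches (default: blank lines between paragraphs).
    return [c.strip() for c in re.split(pattern, text) if c.strip()]


def sentence_chunks(text: str) -> List[str]:
    # Naive sentence splitter: break after ., ! or ? followed by whitespace.
    return [s.strip() for s in re.split(r"(?<=[.!?])\s+", text) if s.strip()]


def pack(chunks: List[str], max_words: int) -> List[str]:
    # Greedily merge consecutive chunks while the group stays within max_words;
    # a single chunk longer than max_words is kept whole.
    groups: List[str] = []
    current: List[str] = []
    count = 0
    for chunk in chunks:
        n = len(chunk.split())
        if current and count + n > max_words:
            groups.append(" ".join(current))
            current, count = [], 0
        current.append(chunk)
        count += n
    if current:
        groups.append(" ".join(current))
    return groups


pages = asyncio.run(crawl_many(list(PAGES)))
doc = "\n\n".join(pages.values())
print(regex_chunks(doc))
# ['Crawlers fetch pages. Pages become Markdown!', 'Chunking splits long text. Why chunk at all?']
print(pack(sentence_chunks(doc), max_words=7))
# ['Crawlers fetch pages. Pages become Markdown!', 'Chunking splits long text.', 'Why chunk at all?']
```

`pack` groups small chunks up to a word budget, a common way to keep each chunk within an LLM's context limit.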